Distributed Representation

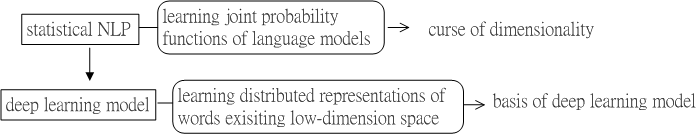

Fig. 1: Statistical NLP vs. deep learning models

Fig. 1 illustrates the shift from learning joint probability functions to learning distributed representations.

Statistical NLP has emerged as the primary option for modeling natural language tasks.

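However, in its early days it often suffered from the "curse of dimensionality" when learning the joint probability functions of language models: the number of possible word sequences grows exponentially with sequence length, so most sequences are never observed in training data. This motivated learning distributed representations of words in a low-dimensional space.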
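To make the scale of the problem concrete, the following minimal sketch compares the number of entries in a full joint probability table over n-word sequences with the number of parameters in a word embedding table. The vocabulary size (10,000) and embedding dimension (50) are assumed values chosen for illustration, and the function names are hypothetical; the embedding count covers only the lookup table, not the rest of a neural model.

```python
def joint_table_size(vocab_size: int, n: int) -> int:
    """Entries in a full joint probability table over n-word sequences."""
    return vocab_size ** n


def embedding_table_size(vocab_size: int, dim: int) -> int:
    """Parameters in an embedding table with one dim-sized vector per word."""
    return vocab_size * dim


V = 10_000  # assumed vocabulary size (illustrative)
D = 50      # assumed embedding dimension (illustrative)

# The joint table grows exponentially with the sequence length n.
for n in (1, 2, 3):
    print(f"n={n}: joint table entries = {joint_table_size(V, n):,}")

# The embedding table grows only linearly with the vocabulary size.
print(f"embedding parameters = {embedding_table_size(V, D):,}")
```

Under these assumptions, a table over three-word sequences already needs 10^12 entries, while the embedding table needs 500,000 parameters.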

The concept of distributed representation is the basis of deep learning models for NLP.
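As a rough illustration of what a distributed representation offers, the sketch below contrasts one-hot vectors, where every pair of distinct words is equally dissimilar, with dense low-dimensional vectors, where related words can lie close together. The three-word vocabulary and the 2-dimensional vectors are hand-picked for illustration; they are assumptions, not learned embeddings.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


vocab = ["cat", "dog", "car"]  # toy vocabulary (assumption)

# One-hot: one dimension per word, so the dimension equals the vocabulary size.
one_hot = {
    w: [1.0 if i == j else 0.0 for j in range(len(vocab))]
    for i, w in enumerate(vocab)
}

# Dense: hand-picked 2-d vectors for illustration only, not learned values.
dense = {"cat": [0.9, 0.1], "dog": [0.8, 0.2], "car": [0.1, 0.9]}

# Distinct one-hot vectors are orthogonal, so no similarity is expressed.
print(cosine(one_hot["cat"], one_hot["dog"]))

# Dense vectors can place "cat" nearer to "dog" than to "car".
print(cosine(dense["cat"], dense["dog"]))
print(cosine(dense["cat"], dense["car"]))
```

In a trained model the dense vectors would be learned from data rather than set by hand; the point here is only that a low-dimensional space can encode graded similarity, which one-hot vectors cannot.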

[0] Young, Tom, et al. "Recent trends in deep learning based natural language processing." arXiv preprint arXiv:1708.02709 (2017).