# Copyright 2015 The TensorFlow Authors. All Rights Reserved.
#
# Licensed under the Apache License, Version 2.0 (the "License");
# you may not use this file except in compliance with the License.
# You may obtain a copy of the License at
#
#     http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.
# ==============================================================================


"""Utilities for parsing PTB text files."""
from __future__ import absolute_import
from __future__ import division
from __future__ import print_function

import collections
import os
import sys

import tensorflow as tf

Py3 = sys.version_info[0] == 3

def _read_words(filename):
  with tf.gfile.GFile(filename, "r") as f:
    if Py3:
      return f.read().replace("\n", "<eos>").split()
    else:
      return f.read().decode("utf-8").replace("\n", "<eos>").split()


def _build_vocab(filename):
  data = _read_words(filename)

  counter = collections.Counter(data)
  count_pairs = sorted(counter.items(), key=lambda x: (-x[1], x[0]))

  words, _ = list(zip(*count_pairs))
  word_to_id = dict(zip(words, range(len(words))))

  return word_to_id


def _file_to_word_ids(filename, word_to_id):
  data = _read_words(filename)
  return [word_to_id[word] for word in data if word in word_to_id]


def ptb_raw_data(data_path=None):
  """Load PTB raw data from data directory "data_path".

  Reads PTB text files, converts strings to integer ids,
  and performs mini-batching of the inputs.

  The PTB dataset comes from Tomas Mikolov's webpage:

  http://www.fit.vutbr.cz/~imikolov/rnnlm/simple-examples.tgz

  Args:
    data_path: string path to the directory where simple-examples.tgz has
      been extracted.

  Returns:
    tuple (train_data, valid_data, test_data, vocabulary)
    where each of the data objects can be passed to PTBIterator.
  """

  train_path = os.path.join(data_path, "ptb.train.txt")
  valid_path = os.path.join(data_path, "ptb.valid.txt")
  test_path = os.path.join(data_path, "ptb.test.txt")

  word_to_id = _build_vocab(train_path)
  train_data = _file_to_word_ids(train_path, word_to_id)
  valid_data = _file_to_word_ids(valid_path, word_to_id)
  test_data = _file_to_word_ids(test_path, word_to_id)
  vocabulary = len(word_to_id)
  return train_data, valid_data, test_data, vocabulary


def ptb_producer(raw_data, batch_size, num_steps, name=None):
  """Iterate on the raw PTB data.

  This chunks up raw_data into batches of examples and returns Tensors that
  are drawn from these batches.

  Args:
    raw_data: one of the raw data outputs from ptb_raw_data.
    batch_size: int, the batch size.
    num_steps: int, the number of unrolls.
    name: the name of this operation (optional).

  Returns:
    A pair of Tensors, each shaped [batch_size, num_steps]. The second element
    of the tuple is the same data time-shifted to the right by one.

  Raises:
    tf.errors.InvalidArgumentError: if batch_size or num_steps are too high.
  """
  with tf.name_scope(name, "PTBProducer", [raw_data, batch_size, num_steps]):
    raw_data = tf.convert_to_tensor(raw_data, name="raw_data", dtype=tf.int32)

    data_len = tf.size(raw_data)
    batch_len = data_len // batch_size
    data = tf.reshape(raw_data[0 : batch_size * batch_len],
                      [batch_size, batch_len])

    epoch_size = (batch_len - 1) // num_steps
    assertion = tf.assert_positive(
        epoch_size,
        message="epoch_size == 0, decrease batch_size or num_steps")
    with tf.control_dependencies([assertion]):
      epoch_size = tf.identity(epoch_size, name="epoch_size")

    i = tf.train.range_input_producer(epoch_size, shuffle=False).dequeue()
    x = tf.strided_slice(data, [0, i * num_steps],
                         [batch_size, (i + 1) * num_steps])
    x.set_shape([batch_size, num_steps])
    y = tf.strided_slice(data, [0, i * num_steps + 1],
                         [batch_size, (i + 1) * num_steps + 1])
    y.set_shape([batch_size, num_steps])
    return x, y

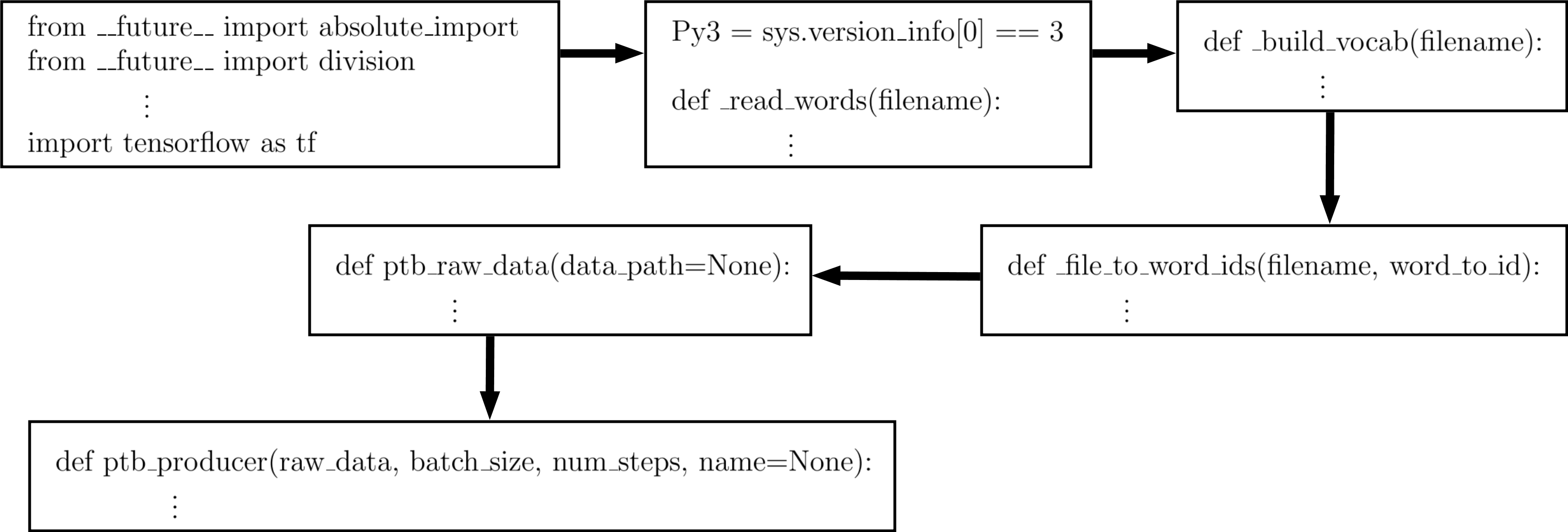

Figure 1: Flow chart of the reader.py file

Seven names are imported: the __future__ features absolute_import, division, and print_function, and the modules collections, os, sys, and tensorflow (as tf).

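The function _read_words is defined. It reads a file and returns its whitespace-separated tokens, with every newline replaced by the token <eos>.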
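The tokenization performed by _read_words can be tried without TensorFlow. The following is a minimal sketch that applies the same replace-then-split logic to an in-memory string; the helper name read_words_from_text and the sample text are hypothetical and not part of reader.py.

```python
def read_words_from_text(text):
    """Apply the replace-then-split tokenization of _read_words to a string."""
    # Every newline becomes the end-of-sentence token "<eos>"; the result
    # is then split on whitespace.
    return text.replace("\n", "<eos>").split()


# Hypothetical sample: each line is padded with spaces around the words.
sample = " the cat sat \n the dog ran \n"
tokens = read_words_from_text(sample)
print(tokens)
# ['the', 'cat', 'sat', '<eos>', 'the', 'dog', 'ran', '<eos>']

# Without a space before the newline, "<eos>" fuses with its neighbours.
print(read_words_from_text("a\nb"))
# ['a<eos>b']
```

The second call shows that this approach relies on whitespace around each newline. If the input lines are not padded with spaces, <eos> does not come out as a separate token.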

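The function _build_vocab is defined. It counts the words in a file and maps each word to an integer id, ordered by descending frequency with ties broken alphabetically.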

The function _file_to_word_ids is defined. It converts a file into a list of word ids, skipping any word that is not in the vocabulary.
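The id assignment in _build_vocab and the lookup in _file_to_word_ids can be illustrated on a small word list. This is a sketch under assumptions: the helper names build_vocab and words_to_ids and the toy data are hypothetical, and the helpers take lists of words rather than file names so that the example runs without TensorFlow.

```python
import collections


def build_vocab(words):
    """Assign ids by descending frequency, with ties broken alphabetically."""
    counter = collections.Counter(words)
    # Same sort key as reader.py: negative count first, then the word itself.
    count_pairs = sorted(counter.items(), key=lambda x: (-x[1], x[0]))
    return {word: idx for idx, (word, _) in enumerate(count_pairs)}


def words_to_ids(words, word_to_id):
    """Map words to ids, silently dropping words missing from the vocabulary."""
    return [word_to_id[w] for w in words if w in word_to_id]


train_words = ["the", "cat", "sat", "<eos>", "the", "dog", "ran", "<eos>"]
word_to_id = build_vocab(train_words)
print(word_to_id)
# {'<eos>': 0, 'the': 1, 'cat': 2, 'dog': 3, 'ran': 4, 'sat': 5}

# "bird" is not in the vocabulary, so it is skipped rather than raising.
print(words_to_ids(["the", "bird", "sat", "<eos>"], word_to_id))
# [1, 5, 0]
```

Because unknown words are dropped instead of being mapped to a placeholder id, the returned list can be shorter than the input.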

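The function ptb_raw_data is defined. It builds the vocabulary from ptb.train.txt and returns the training, validation, and test data as lists of ids, together with the vocabulary size.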

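The function ptb_producer is defined. It reshapes the id sequence into batch_size rows and returns a pair of tensors of shape [batch_size, num_steps], where the second tensor holds the same data shifted by one position.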
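The slicing arithmetic in ptb_producer is easier to follow on plain Python lists. The sketch below reproduces the same index calculations for a single pass over the data. The generator name ptb_batches is hypothetical, and nested lists stand in for tensors. It is not a replacement for the queue-based TensorFlow version, which draws its batch index from tf.train.range_input_producer.

```python
def ptb_batches(raw_data, batch_size, num_steps):
    """Yield (x, y) pairs as nested lists, following ptb_producer's slicing."""
    batch_len = len(raw_data) // batch_size
    # Row r holds the r-th contiguous stretch of the id stream; any
    # leftover ids at the end of raw_data are discarded.
    data = [raw_data[r * batch_len:(r + 1) * batch_len]
            for r in range(batch_size)]
    epoch_size = (batch_len - 1) // num_steps
    if epoch_size <= 0:
        raise ValueError("epoch_size == 0, decrease batch_size or num_steps")
    for i in range(epoch_size):
        start = i * num_steps
        x = [row[start:start + num_steps] for row in data]
        # Targets are the inputs moved one position ahead in each row.
        y = [row[start + 1:start + num_steps + 1] for row in data]
        yield x, y


batches = list(ptb_batches(list(range(11)), batch_size=2, num_steps=2))
for x, y in batches:
    print(x, y)
# [[0, 1], [5, 6]] [[1, 2], [6, 7]]
# [[2, 3], [7, 8]] [[3, 4], [8, 9]]
```

With 11 ids and batch_size=2, batch_len is 5, so the last id (10) is dropped. The formula (batch_len - 1) // num_steps reserves one extra column so that every input position has a target.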