Transcribe_file_with_word_time_offsets Code Implementation

Fig.1 Transcribing a WAV file into a time-stamped character sequence with noise reduction and the Google Cloud Speech-to-Text service

Fig.1 shows the high-level flowchart of transcribe_file_with_word_time_offsets.py: the input is an audio file and the output is its speech-recognition result.

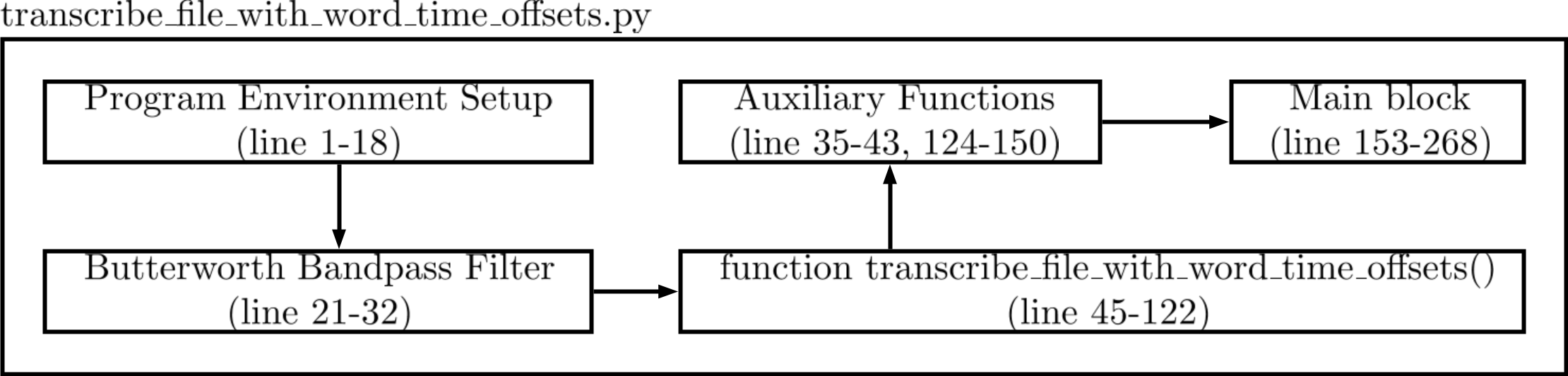

Fig.2 Mid-level flowchart of transcribe_file_with_word_time_offsets.py

Fig.2 shows the mid-level flowchart of transcribe_file_with_word_time_offsets.py.

Lines 1-18 are the Program Environment Setup block,

lines 21-32 are the Butterworth Bandpass Filter,

lines 45-122 are the function transcribe_file_with_word_time_offsets(),

lines 35-43 and 124-150 are the Auxiliary Functions,

and lines 153-268 are the Main block.

```
python transcribe_file_with_word_time_offsets.py --beta --batch_size 25 --batch_idx <batch range> --input_dir <input data folder>
```

Command 1: invoking the script from the Terminal
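The `<batch range>` placeholder is a 1-based batch number or range. As read from lines 192-209 of the listing, `A-B` selects batches A through B, either end may be left empty to mean the first or the last batch, and `0` (the default set on line 172) selects every batch; each batch covers `batch_size` consecutive files of the sorted WAV list. Below is a minimal standalone sketch of that mapping. It assumes a single number N should select the N-th batch, consistent with the range form, and the helper names `batch_range` and `batch_files` are hypothetical.

```python
import math
import re


def batch_range(spec, n_files, batch_size=25):
    """Return (begin_idx, end_idx), the half-open range of 0-based batch
    indices selected by a --batch_idx string: 'N', 'A-B', 'A-', '-B' or '0'."""
    n_batches = math.ceil(n_files / batch_size)
    if '-' in spec:
        m = re.fullmatch(r'([0-9]*)-([0-9]*)', spec)
        if m is None:
            raise ValueError('bad batch range: {!r}'.format(spec))
        begin = int(m.group(1)) - 1 if m.group(1) else 0    # 1-based start
        end = int(m.group(2)) if m.group(2) else n_batches  # inclusive 1-based end
    else:
        k = int(spec)
        # '0' selects every batch; any other N selects the N-th batch (1-based)
        begin, end = (k - 1, k) if k != 0 else (0, n_batches)
    return begin, end


def batch_files(files, b, batch_size=25):
    """Files handled by 0-based batch b; the last batch may be shorter."""
    return files[b * batch_size:(b + 1) * batch_size]


files = ['C{:04d}.wav'.format(i) for i in range(1, 61)]  # 60 made-up file names
print(batch_range('0', len(files)))  # -> (0, 3)
print(batch_range('3', len(files)))  # -> (2, 3)
print(len(batch_files(files, 2)))    # -> 10
```

The workbook for 0-based batch `b` is numbered `b+1` (line 240), so the 1-based numbers on the command line match the `_NN.xlsx` suffixes.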

```
001 #!/usr/bin/env python3
002 # -*- coding: utf-8 -*-
003 """
004 Created on Thu Aug 9 06:02:41 2018
005
006 @author: petertsai
007 """
008 import sys
009 import io
010 import os
011 import glob
012 import re
013 import numpy as np
014 import pandas as pd
015 import argparse
016 from openpyxl import load_workbook
017 from scipy.io import wavfile
018 from scipy.signal import butter, lfilter
019
020
021 def butter_bandpass(lowcut, highcut, fs, order=5):
022     nyq = 0.5 * fs  # Nyquist frequency
023     low = lowcut / nyq  # cutoffs normalized to Nyquist, as butter() expects
024     high = highcut / nyq
025     b, a = butter(order, [low, high], btype='band')  # transfer-function coefficients
026     return b, a
027
028
029 def butter_bandpass_filter(data, lowcut, highcut, fs, order=5):
030     b, a = butter_bandpass(lowcut, highcut, fs, order=order)
031     y = lfilter(b, a, data)  # causal IIR filtering along the last axis
032     return y
033
034
```
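Lines 21-32 design the filter in transfer-function `(b, a)` form and apply it with `lfilter`; line 135 calls it with a 300-3000 Hz pass band and `order=6` (a band-pass design of order 6 has 12 poles in total). As a sanity check, the sketch below measures the steady-state gain of that filter at a few frequencies. It swaps in second-order sections (`output='sos'` with `sosfilt`), which SciPy's `butter` documentation recommends over `(b, a)` to avoid numerical problems at higher orders, and it rounds and clips before casting back to the integer PCM type. The 16 kHz sampling rate and the function names are assumptions for illustration.

```python
import numpy as np
from scipy.signal import butter, sosfilt


def butter_bandpass_sos(lowcut, highcut, fs, order=6):
    """Butterworth band-pass filter as second-order sections."""
    nyq = 0.5 * fs
    return butter(order, [lowcut / nyq, highcut / nyq], btype='band', output='sos')


def bandpass_pcm(x, fs, lowcut=300.0, highcut=3000.0, order=6):
    """Band-pass integer PCM samples and return the same dtype.
    Values are rounded and clipped first, because casting out-of-range
    floats straight to int16 is not guaranteed to saturate."""
    sos = butter_bandpass_sos(lowcut, highcut, fs, order)
    y = sosfilt(sos, x.astype(np.float64))
    info = np.iinfo(x.dtype)
    return np.clip(np.round(y), info.min, info.max).astype(x.dtype)


def steady_state_gain(freq_hz, fs=16000, lowcut=300.0, highcut=3000.0, order=6):
    """Filter a 2 s unit sine and compare RMS over the second half,
    after the start-up transient has died out."""
    t = np.arange(2 * fs) / fs
    x = np.sin(2 * np.pi * freq_hz * t)
    y = sosfilt(butter_bandpass_sos(lowcut, highcut, fs, order), x)
    half = len(t) // 2
    return np.sqrt(np.mean(y[half:] ** 2)) / np.sqrt(np.mean(x[half:] ** 2))


for f in (50, 1000, 7000):
    print(f, 'Hz gain:', round(float(steady_state_gain(f)), 4))
```

A gain close to 1 at 1 kHz and close to 0 at 50 Hz and 7 kHz confirms that the filter keeps the telephone speech band and removes hum and high-frequency hiss.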

```
035 def parse_filepath(speech_file):
036     if sys.platform == 'win32':  # Windows paths use backslashes
037         m = re.match(r'(.+)\\(.+)', speech_file)
038     else:
039         m = re.match(r'(.+)/(.+)', speech_file)
040
041     path = m.group(1)  # the greedy (.+) splits at the last separator
042     filename = m.group(2)
043     return path, filename
044
```
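`parse_filepath()` relies on the greedy `(.+)` to split at the last separator, and it raises an `AttributeError` when the path has no separator at all, because `re.match` then returns `None`. A sketch of a portable alternative follows, using the standard library's `os.path.split` (which on Windows also accepts forward slashes), together with the audio-index extraction from line 93. The function names are hypothetical.

```python
import os
import re


def parse_filepath_portable(speech_file):
    """Split a path into (directory, filename) at the last separator.
    os.path.split uses the current platform's separators and returns ''
    as the directory for a bare filename instead of raising."""
    return os.path.split(speech_file)


def audio_index(filename):
    """Extract '0001' from 'C0001.wav', mirroring the regex on line 93."""
    m = re.match(r'C(.+)\.wav', filename)
    if m is None:
        raise ValueError('unexpected file name: {!r}'.format(filename))
    return m.group(1)


path, name = parse_filepath_portable(os.path.join('choices(entire)', 'nr', 'C0001.wav'))
print(path, name, audio_index(name))
```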

```
045 def transcribe_file_with_word_time_offsets(speech_file, timestamp_writer, language_code='cmn-Hant-TW', beta=False, alternative_language=None):
046     """Transcribe the given audio file synchronously and output the word time
047     offsets."""
048     samp_freq, _ = wavfile.read(speech_file)  # only the sample rate is needed here
049     if beta:
050         from google.cloud import speech_v1p1beta1 as speech  # beta API: accepts alternative_language_codes, enable_word_confidence
051         from google.cloud.speech_v1p1beta1 import enums
052         from google.cloud.speech_v1p1beta1 import types
053
054         config = types.RecognitionConfig(
055             encoding=enums.RecognitionConfig.AudioEncoding.LINEAR16,
056             sample_rate_hertz=samp_freq,
057             language_code=language_code,
058             #alternative_language_codes=['en-US'],
059             alternative_language_codes=alternative_language,
060             enable_word_time_offsets=True,
061             speech_contexts=[types.SpeechContext(
062                 phrases=['四', '三', '二', '一'],  # phrase hints biasing recognition toward these digits
063             )],
064             enable_word_confidence=True
065         )
066
067     else:
068         from google.cloud import speech  # pre-2.0 google-cloud-speech layout (enums/types modules)
069         from google.cloud.speech import enums
070         from google.cloud.speech import types
071
072         config = types.RecognitionConfig(
073             encoding=enums.RecognitionConfig.AudioEncoding.LINEAR16,
074             sample_rate_hertz=samp_freq,
075             language_code=language_code,
076             enable_word_time_offsets=True,
077             speech_contexts=[types.SpeechContext(
078                 phrases=['四', '三', '二', '一'],
079             )],
080             #enable_word_confidence=True
081         )
082
083     client = speech.SpeechClient()
084
085     with io.open(speech_file, 'rb') as audio_file:
086         content = audio_file.read()
087
088     audio = types.RecognitionAudio(content=content)
089
090     response = client.recognize(config, audio)  # synchronous: audio up to about 1 minute
091
092     _, filename = parse_filepath(speech_file)
093     n = re.match(r'C(.+)\.wav', filename)
094     audio_index = n.group(1)
095     results = []
096     for i, result in enumerate(response.results):
097         alternative = result.alternatives[0]  # most likely hypothesis
098         print(u'Transcript {}_{}: {}'.format(audio_index, i+1, alternative.transcript))
099         results.append(alternative.transcript)
100
101         word_index = []
102         start_time_column = []
103         end_time_column = []
104         confidences_list = []
105         for word_info in alternative.words:
106             word = word_info.word
107             start_time = word_info.start_time
108             end_time = word_info.end_time
109             word_index.append(word)
110             start_time_column.append(start_time.seconds + start_time.nanos * 1e-9)  # Duration -> float seconds
111             end_time_column.append(end_time.seconds + end_time.nanos * 1e-9)
112             if hasattr(word_info, 'confidence'):
113                 confidences_list.append(word_info.confidence)
114             else:
115                 confidences_list.append(float('nan'))
116
117         df = pd.DataFrame({'start_time': start_time_column, 'end_time': end_time_column, 'confidence': confidences_list}, index=word_index)
118         #print(df)
119         df.index.name = 'word'
120         df.to_excel(timestamp_writer, '{}_{}'.format(filename, i+1))  # one sheet per result, e.g. "C0001.wav_1"
121
122     return results
123
```
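Lines 105-119 convert each word's start and end offsets, which the pre-2.0 client returns as protobuf `Duration` messages with `seconds` and `nanos` fields, into float seconds and collect them in a DataFrame indexed by word. The sketch below reproduces that step offline. `Duration` and `WordInfo` are hypothetical stand-ins for the protobuf messages, and the words and confidences are made-up sample values; in google-cloud-speech 2.x the offsets arrive as `datetime.timedelta`, which the sketch also accepts.

```python
from collections import namedtuple
from datetime import timedelta

import pandas as pd

# Hypothetical stand-ins for the protobuf messages used on lines 105-115.
Duration = namedtuple('Duration', ['seconds', 'nanos'])
WordInfo = namedtuple('WordInfo', ['word', 'start_time', 'end_time', 'confidence'])


def to_seconds(d):
    """Float seconds from a seconds/nanos pair (lines 110-111) or a timedelta."""
    if isinstance(d, timedelta):
        return d.total_seconds()
    return d.seconds + d.nanos * 1e-9


def words_to_frame(words):
    """Build the per-result table that lines 117-120 write to one Excel sheet."""
    df = pd.DataFrame(
        {'start_time': [to_seconds(w.start_time) for w in words],
         'end_time': [to_seconds(w.end_time) for w in words],
         'confidence': [w.confidence for w in words]},
        index=[w.word for w in words])
    df.index.name = 'word'
    return df


words = [WordInfo('四', Duration(0, 300000000), Duration(0, 700000000), 0.92),
         WordInfo('三', Duration(0, 700000000), Duration(1, 100000000), 0.88)]
print(words_to_frame(words))
```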

```
124 def batch_transcribe_speechFile(speechFileQueue, existSpeechFileList, choices_list, timestamp_writer, nr, beta=True):
125     #print('speech files in Queue:')
126     #print(speechFileQueue)
127     for i, speechFile in enumerate(speechFileQueue):
128
129         if nr:
130             ''' audio noise reduction '''
131             path, filename = parse_filepath(speechFile)
132             ofilename = os.path.join(path, 'nr', filename)  # filtered copy cached in <input_dir>/nr/
133             if not os.path.isfile(ofilename):
134                 samp_freq, x = wavfile.read(speechFile)
135                 y = butter_bandpass_filter(x, 300, 3000, samp_freq, order=6)  # keep the 300-3000 Hz speech band
136                 y = y.astype(x.dtype)  # back to the input PCM dtype (e.g. int16)
137                 wavfile.write(ofilename, samp_freq, y)
138
139         else:
140             ofilename = speechFile
141
142         ''' transcription '''
143         choices = transcribe_file_with_word_time_offsets(ofilename, timestamp_writer, beta=beta)
144         choices_list.append(choices)
145
146         existSpeechFileList.append(speechFile)
147         df = pd.DataFrame({'filename': existSpeechFileList, 'sentence': choices_list})
148         df = df.sort_values(by=['filename'])
149         df.to_csv(output_csv_filename)  # module-level global set in the Main block (line 227)
150         timestamp_writer.save()  # pandas < 2.0 API; ExcelWriter.save() was removed in pandas 2.0
151
152
```
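`batch_transcribe_speechFile()` rewrites the whole result CSV after every file (lines 146-149) so that an interrupted run can resume through lines 230-233. One subtlety: each `sentence` cell holds a Python list of transcripts, which `to_csv` stores as its string form, so after a resume the list read back contains strings rather than lists. The hypothetical helpers below show that round trip and use `ast.literal_eval` to restore the lists; the file names and transcripts are made-up sample values.

```python
import ast
import os
import tempfile

import pandas as pd


def save_results(csv_path, filenames, sentences):
    """Write one row per file, sorted by filename (as on lines 147-149)."""
    df = pd.DataFrame({'filename': filenames, 'sentence': sentences})
    df.sort_values(by=['filename']).to_csv(csv_path)


def load_results(csv_path):
    """Read the CSV back (as on lines 231-233) and turn each stored list
    representation such as "['四三', '一']" back into a Python list."""
    df = pd.read_csv(csv_path, index_col=0)
    return df['filename'].tolist(), [ast.literal_eval(s) for s in df['sentence']]


with tempfile.TemporaryDirectory() as tmp:
    csv_path = os.path.join(tmp, 'transcribe_output_beta_nr.csv')
    save_results(csv_path, ['in/C0002.wav', 'in/C0001.wav'], [['二'], ['四三', '一']])
    files, sentences = load_results(csv_path)
    print(files, sentences)
```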

```
153 if __name__ == '__main__':
154
155     os.environ["GOOGLE_APPLICATION_CREDENTIALS"] = "GC-AI-Challenge-4dc21e90cad0.json"  # service-account key file
156     parser = argparse.ArgumentParser(
157         description=__doc__,
158         formatter_class=argparse.RawDescriptionHelpFormatter)
159
160     parser.add_argument('--beta', dest='beta', action='store_true')
161     parser.add_argument('--no-beta', dest='beta', action='store_false')
162     parser.add_argument('--nr', dest='nr', action='store_true')
163     parser.add_argument('--no-nr', dest='nr', action='store_false')
164     parser.add_argument('--reset', dest='reset', action='store_true')
165     parser.add_argument('--no-reset', dest='reset', action='store_false')
166
167
168
169     parser.add_argument('--batch_idx', dest='batch_idx', help='[1-60]')
170     parser.add_argument('--batch_size', dest='batch_size', type=int, help='default 25')
171     parser.add_argument('--input_dir', dest='input_dir', help='input wav folder')
172     parser.set_defaults(beta=True, nr=True, reset=False, batch_idx='0', batch_size=25, input_dir='choices(entire)')  # batch_idx '0' = all batches
173
174
175     args = parser.parse_args()
176     batch_size = args.batch_size
177     string_batch_range = args.batch_idx
178     input_dir = args.input_dir
179
180     input_nr_dir = os.path.join(input_dir, 'nr')
181     if not os.path.isdir(input_nr_dir):
182         os.makedirs(input_nr_dir)
183
184     output_dir = 'result_' + input_dir
185     if not os.path.isdir(output_dir):
186         os.makedirs(output_dir)
187
188     entireSpeechFileList = sorted(glob.glob(os.path.join(input_dir, '*.wav')))
189     #print('entire speech files in folder {}'.format(input_dir))
190     #print(entireSpeechFileList)
191
192     if re.search('-', string_batch_range):
193         rr = re.match(r'([0-9]*)-([0-9]*)', string_batch_range)
194         if rr.group(1):
195             begin_idx = int(rr.group(1)) - 1
196         else:
197             begin_idx = 0
198         if rr.group(2):
199             end_idx = int(rr.group(2))
200         else:
201             end_idx = int(np.ceil(len(entireSpeechFileList)/batch_size))
202     else:
203         rr = re.match(r'([0-9]*)', string_batch_range)
204         if int(rr.group(1)) != 0:
205             begin_idx = int(rr.group(1)) - 1  # 1-based batch number, as in the range form
206             end_idx = int(rr.group(1))
207         else:
208             begin_idx = 0
209             end_idx = int(np.ceil(len(entireSpeechFileList)/batch_size))
210
211
212     print('beta:', args.beta)
213     if args.beta:
214         suffix = 'beta'
215     else:
216         suffix = ''
217     print('suffix:', suffix)
218
219     print('nr:', args.nr)
220     if args.nr:
221         suffix1 = 'nr'
222     else:
223         suffix1 = ''
224     print('suffix1:', suffix1)
225
226
227     output_csv_filename = os.path.join(output_dir, 'transcribe_output_{}_{}.csv'.format(suffix, suffix1))
228
229     '''check result csv exist or not'''
230     if os.path.isfile(output_csv_filename) and not(args.reset):
231         df = pd.read_csv(output_csv_filename, index_col=0)
232         existSpeechFileList = df.filename.tolist()
233         choices_list = df.sentence.tolist()
234     else:
235         choices_list = []
236         existSpeechFileList = []
237
238     for b in range(begin_idx, end_idx):
239
240         timestamp_xls_filename = os.path.join(output_dir, 'transcribe_timestamp{}_{:02d}.xlsx'.format(suffix, b+1))
241         timestamp_writer = pd.ExcelWriter(timestamp_xls_filename, engine='openpyxl')  # lines 258-260 assume an openpyxl workbook
242
243         ''' check batch xlsx status 1. complete task 2. processing not yet finished 3. new '''
244         titles = []
245         if os.path.isfile(timestamp_xls_filename):
246             print('load {} ...'.format(timestamp_xls_filename))
247
248             book = load_workbook(timestamp_xls_filename)
249
250             for ws in book.worksheets:
251                 mmm = re.match(r'(.+)\.wav_(.+)', ws.title)
252                 titles.append(os.path.join(input_dir, mmm.group(1) + '.wav'))
253             titles = sorted(list(set(titles)))
254             print('number of sheets: {}'.format(len(titles)))
255             if len(titles) == len(entireSpeechFileList[b*batch_size:(b+1)*batch_size]):  # every file of this batch has a sheet
256                 continue
257             else:
258                 book = load_workbook(timestamp_xls_filename)
259                 timestamp_writer.book = book
260                 timestamp_writer.sheets = dict((ws.title, ws) for ws in book.worksheets)
261                 speechFileQueue = sorted(list(set(entireSpeechFileList[b*batch_size:(b+1)*batch_size]) - set(titles)))
262
263                 batch_transcribe_speechFile(speechFileQueue, existSpeechFileList, choices_list, timestamp_writer, args.nr, beta=args.beta)
264         else:
265             print('create new {} !!!'.format(timestamp_xls_filename))
266             speechFileQueue = entireSpeechFileList[b*batch_size:(b+1)*batch_size]
267
268             batch_transcribe_speechFile(speechFileQueue, existSpeechFileList, choices_list, timestamp_writer, args.nr, beta=args.beta)
```

The code above is saved in the file transcribe_file_with_word_time_offsets.py.
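Lines 244-261 decide how much of a batch still needs work by reading the sheet titles already present in that batch's workbook. Each sheet is named `<file>.wav_<n>` (line 120), so stripping the `_<n>` suffix recovers the files that have been transcribed. The sketch below restates that bookkeeping as pure functions over a plain list of sheet titles, so it can be tested without openpyxl or a real workbook; the function names and sample titles are hypothetical. Unlike line 251, which would raise an `AttributeError`, the sketch skips titles that do not follow the naming scheme. In both versions a file whose recognition returned no results gets no sheet, so it is queued again on the next run.

```python
import os
import re


def done_files(sheet_titles, input_dir):
    """Map sheet titles like 'C0001.wav_2' back to input paths (lines 250-253)."""
    done = set()
    for title in sheet_titles:
        m = re.match(r'(.+)\.wav_(.+)', title)
        if m:  # ignore sheets that do not follow the naming scheme
            done.add(os.path.join(input_dir, m.group(1) + '.wav'))
    return sorted(done)


def pending_files(all_files, b, batch_size, sheet_titles, input_dir):
    """Files of 0-based batch b that have no sheet yet (line 261)."""
    batch = all_files[b * batch_size:(b + 1) * batch_size]
    return sorted(set(batch) - set(done_files(sheet_titles, input_dir)))


input_dir = 'choices(entire)'
all_files = [os.path.join(input_dir, 'C{:04d}.wav'.format(i)) for i in range(1, 6)]
titles = ['C0001.wav_1', 'C0001.wav_2', 'C0003.wav_1']
print(pending_files(all_files, 0, 25, titles, input_dir))
```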