# CNN2

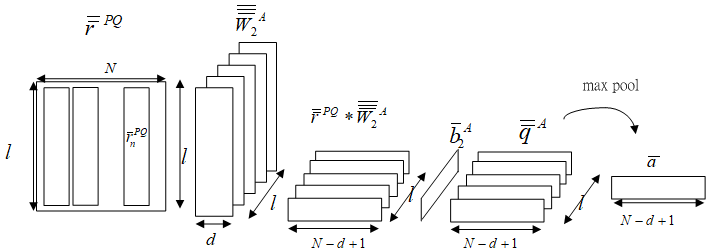

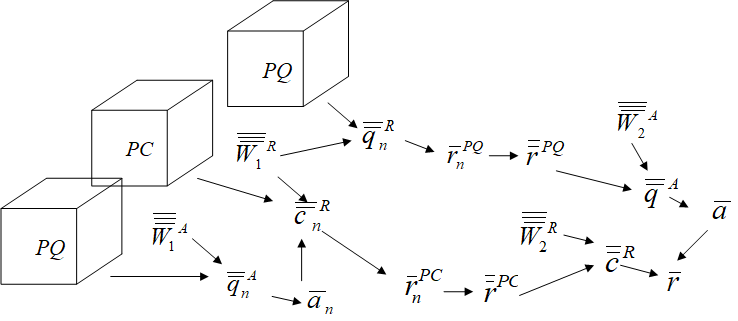

Fig.xx Derivation of sentence-level attention map from query-based sentence features

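Fig.xx shows the derivation of the sentence-level attention map from the query-based sentence features. The quantities in the figure correspond to these variables in the code:

- query-based sentence features: `h_pool_PQ_1` from #CNN1
- convolution kernel: `WQ2`
- convolution output: `convPQ_2`
- bias: `bQ2`
- sentence-level attention map: `wPQ_2`. Both attention-map quantities in the figure are stored in `wPQ_2`, which the code reassigns at each step (sigmoid, max-pool, tile).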
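The attention-map computation can be sketched in plain Python for a single example. This is a minimal sketch, not the author's implementation. It assumes that each example of `h_pool_PQ_1` is a `num_filters_total` × `max_plot_len` grid, matching `filter_shape`, and it skips dropout (inference behaviour). The helper names `conv_valid_full_height` and `sentence_attention` are hypothetical.

```python
import math


def conv_valid_full_height(x, w, b):
    """'VALID', stride-1 convolution whose kernel spans every row of x.

    Like tf.nn.conv2d, this is a cross-correlation (no kernel flip).
    x: H rows, each a list of L values (H feature rows, L sentences)
    w: K kernels, each H rows of filter_size values
    b: K biases
    Returns K lists of L - filter_size + 1 pre-activation values.
    """
    height, length = len(x), len(x[0])
    fs = len(w[0][0])
    out = []
    for k, kernel in enumerate(w):
        row = []
        for t in range(length - fs + 1):
            s = b[k]
            for h in range(height):
                for j in range(fs):
                    s += kernel[h][j] * x[h][t + j]
            row.append(s)
        out.append(row)
    return out


def sentence_attention(query_feats, wq, bq):
    """Sigmoid of the query conv, then max over the K filters per position."""
    pre = conv_valid_full_height(query_feats, wq, bq)
    act = [[1.0 / (1.0 + math.exp(-v)) for v in row] for row in pre]
    # One weight per window position t: the largest activation over filters.
    return [max(act[k][t] for k in range(len(act))) for t in range(len(act[0]))]


# Hypothetical toy input: H = 2 feature rows, L = 3 sentences.
x = [[1.0, 0.0, 2.0], [0.0, 1.0, 0.0]]
# K = 2 kernels with filter_size = 2.
wq = [[[1.0, 0.0], [0.0, 0.0]], [[0.0, 0.0], [0.0, 1.0]]]
bq = [0.0, 1.0]
attention = sentence_attention(x, wq, bq)  # L - filter_size + 1 = 2 weights
print(attention)
```

Taking the maximum over filters mirrors the `tf.nn.max_pool` call with `ksize=[1, self.filter_num2, 1, 1]` on the transposed map. Each window of sentences therefore gets the strongest query response of any filter.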

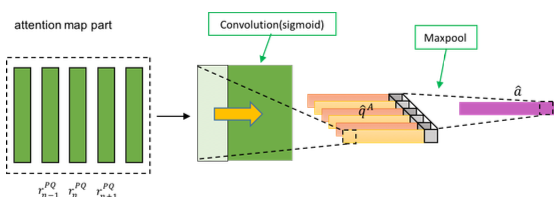

Fig.xx Derivation of sentence-level attention map from query-based sentence features

Fig.xx Derivation of answer feature from choice-based sentence feature

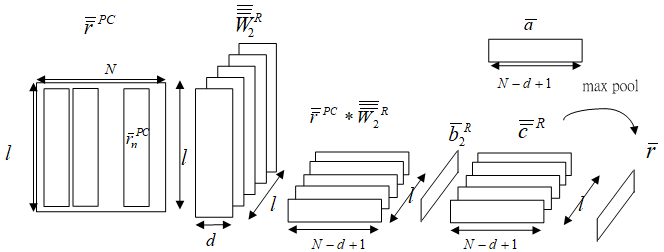

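Fig.xx shows the derivation of the answer feature from the choice-based sentence features. The quantities in the figure correspond to these variables in the code:

- convolution kernel, shared by all choices: `W2`
- convolution outputs: `convPA_2`, `convPB_2`, ..., `convPE_2`
- bias: `b2`
- sentence-level attention map: `wPQ_2`
- attention-weighted hidden features: `hiddenPA_2`, `hiddenPB_2`, ..., `hiddenPE_2`
- pooled answer features: `pooledPA_2`, `pooledPB_2`, ..., `pooledPE_2`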
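The answer-feature path can be sketched the same way for one choice. This is a minimal sketch under the same assumptions: one example is a `num_filters_total` × `max_plot_len` grid, dropout is skipped, and the helper names are hypothetical. It computes ReLU(conv + bias), scales each window position by the attention weight for that position, and max-pools over positions.

```python
def conv_valid_full_height(x, w, b):
    """'VALID', stride-1 cross-correlation with kernels spanning all rows of x."""
    fs = len(w[0][0])
    n_pos = len(x[0]) - fs + 1
    return [
        [b[k] + sum(kernel[h][j] * x[h][t + j]
                    for h in range(len(x)) for j in range(fs))
         for t in range(n_pos)]
        for k, kernel in enumerate(w)
    ]


def answer_feature(choice_feats, w, b, attention):
    """One pooled value per filter, analogous to pooledPA_2 for one example."""
    pre = conv_valid_full_height(choice_feats, w, b)
    # ReLU, then scale position t by the query attention weight attention[t].
    hidden = [[max(v, 0.0) * attention[t] for t, v in enumerate(row)] for row in pre]
    # Max-pool over all window positions.
    return [max(row) for row in hidden]


# Hypothetical toy choice features and kernels (K = 2, filter_size = 2).
x = [[1.0, 3.0, 2.0], [0.0, 1.0, 4.0]]
w = [[[1.0, 0.0], [0.0, 0.0]], [[0.0, 0.0], [0.0, 1.0]]]
b = [0.0, -2.0]
weighted = answer_feature(x, w, b, [1.0, 0.1])    # second window is down-weighted
unweighted = answer_feature(x, w, b, [1.0, 1.0])  # attention has no effect
print(weighted, unweighted)
```

Comparing the two calls shows the role of `wPQ_2`. A window that responds strongly to the choice but weakly to the query contributes much less to the pooled feature.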

Fig.xx Derivation of answer feature from choice-based sentence feature

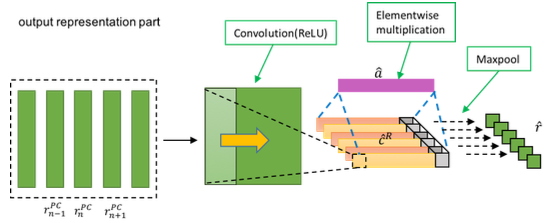

Fig.xx shows the output-representation part of the second stage.

Fig.xx Overall data flow of QACNN

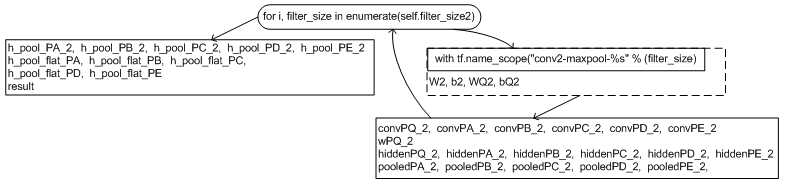

Fig.1 The # CNN2 # flow in the __init__() function

Fig.1 shows the # CNN2 # flow in the __init__() function.

The # CNN2 # part of the __init__() function:

```python
### CNN 2 ###
# Excerpt from __init__(). Assumes `import tensorflow as tf` (TensorFlow 1.x:
# tf.get_variable, tf.contrib) and that h_pool_PQ_1 ... h_pool_PE_1 and
# max_plot_len are defined earlier (#CNN1).
pooled_outputs_PQ_2 = []  # not filled below
pooled_outputs_PA_2 = []
pooled_outputs_PB_2 = []
pooled_outputs_PC_2 = []
pooled_outputs_PD_2 = []
pooled_outputs_PE_2 = []

# Feature rows produced by #CNN1: filters per size x number of filter sizes.
num_filters_total = self.filter_num * len(self.filter_size)

for i, filter_size in enumerate(self.filter_size2):
    with tf.name_scope("conv2-maxpool-%s" % (filter_size)):
        # Each kernel covers all num_filters_total rows and filter_size sentences.
        filter_shape = [num_filters_total, filter_size, 1, self.filter_num2]
        # Kernel and bias shared by the five choice branches (A-E).
        W2 = tf.get_variable(name="W2-%s" % (filter_size), shape=filter_shape,
                             initializer=tf.contrib.layers.xavier_initializer())
        b2 = tf.Variable(tf.constant(0.1, shape=[self.filter_num2]), name="b2")
        # Separate kernel and bias for the query branch (attention map).
        WQ2 = tf.get_variable(name="WQ2-%s" % (filter_size), shape=filter_shape,
                              initializer=tf.contrib.layers.xavier_initializer())
        bQ2 = tf.Variable(tf.constant(0.1, shape=[self.filter_num2]), name="bQ2")

        # Every conv output: [batch, 1, max_plot_len-filter_size+1, self.filter_num2]
        convPQ_2 = tf.nn.conv2d(h_pool_PQ_1, WQ2, strides=[1, 1, 1, 1],
                                padding="VALID", name="conv")
        convPA_2 = tf.nn.conv2d(h_pool_PA_1, W2, strides=[1, 1, 1, 1],
                                padding="VALID", name="conv")
        convPB_2 = tf.nn.conv2d(h_pool_PB_1, W2, strides=[1, 1, 1, 1],
                                padding="VALID", name="conv")
        convPC_2 = tf.nn.conv2d(h_pool_PC_1, W2, strides=[1, 1, 1, 1],
                                padding="VALID", name="conv")
        convPD_2 = tf.nn.conv2d(h_pool_PD_1, W2, strides=[1, 1, 1, 1],
                                padding="VALID", name="conv")
        convPE_2 = tf.nn.conv2d(h_pool_PE_1, W2, strides=[1, 1, 1, 1],
                                padding="VALID", name="conv")

        #hiddenPQ_2 = tf.nn.relu(tf.nn.bias_add(convPQ_2, b2), name="relu")

        # Sentence-level attention map. After the transpose:
        # [batch, self.filter_num2, max_plot_len-filter_size+1, 1]
        wPQ_2 = tf.transpose(tf.sigmoid(tf.nn.bias_add(convPQ_2, bQ2)), [0, 3, 2, 1])
        # In TF 1.x the second argument of tf.nn.dropout is keep_prob,
        # so self.dropoutRate acts as a keep probability here.
        wPQ_2 = tf.nn.dropout(wPQ_2, self.dropoutRate)
        # Max over the filter axis: one weight per window position.
        wPQ_2 = tf.nn.max_pool(
            wPQ_2,
            ksize=[1, self.filter_num2, 1, 1],
            strides=[1, 1, 1, 1],
            padding='VALID',
            name="pool_pq_2")  # [batch_size, 1, max_plot_len-filter_size+1, 1]
        # Copy the weights to every filter and restore the conv layout:
        # [batch_size, 1, max_plot_len-filter_size+1, self.filter_num2]
        wPQ_2 = tf.transpose(tf.tile(wPQ_2, [1, self.filter_num2, 1, 1]), [0, 3, 2, 1])

        # Choice features: ReLU(conv + b2), scaled position-wise by the attention map.
        hiddenPA_2 = tf.nn.relu(tf.nn.bias_add(convPA_2, b2), name="relu") * wPQ_2
        hiddenPB_2 = tf.nn.relu(tf.nn.bias_add(convPB_2, b2), name="relu") * wPQ_2
        hiddenPC_2 = tf.nn.relu(tf.nn.bias_add(convPC_2, b2), name="relu") * wPQ_2
        hiddenPD_2 = tf.nn.relu(tf.nn.bias_add(convPD_2, b2), name="relu") * wPQ_2
        hiddenPE_2 = tf.nn.relu(tf.nn.bias_add(convPE_2, b2), name="relu") * wPQ_2

        hiddenPA_2 = tf.nn.dropout(hiddenPA_2, self.dropoutRate)
        hiddenPB_2 = tf.nn.dropout(hiddenPB_2, self.dropoutRate)
        hiddenPC_2 = tf.nn.dropout(hiddenPC_2, self.dropoutRate)
        hiddenPD_2 = tf.nn.dropout(hiddenPD_2, self.dropoutRate)
        hiddenPE_2 = tf.nn.dropout(hiddenPE_2, self.dropoutRate)

        # Max-pool over all window positions -> [batch_size, 1, 1, self.filter_num2]
        pooledPA_2 = tf.nn.max_pool(
            hiddenPA_2,
            ksize=[1, 1, (max_plot_len-filter_size+1), 1],
            strides=[1, 1, 1, 1],
            padding='VALID',
            name="pool")
        pooled_outputs_PA_2.append(pooledPA_2)

        pooledPB_2 = tf.nn.max_pool(
            hiddenPB_2,
            ksize=[1, 1, (max_plot_len-filter_size+1), 1],
            strides=[1, 1, 1, 1],
            padding='VALID',
            name="pool")
        pooled_outputs_PB_2.append(pooledPB_2)

        pooledPC_2 = tf.nn.max_pool(
            hiddenPC_2,
            ksize=[1, 1, (max_plot_len-filter_size+1), 1],
            strides=[1, 1, 1, 1],
            padding='VALID',
            name="pool")
        pooled_outputs_PC_2.append(pooledPC_2)

        pooledPD_2 = tf.nn.max_pool(
            hiddenPD_2,
            ksize=[1, 1, (max_plot_len-filter_size+1), 1],
            strides=[1, 1, 1, 1],
            padding='VALID',
            name="pool")
        pooled_outputs_PD_2.append(pooledPD_2)

        pooledPE_2 = tf.nn.max_pool(
            hiddenPE_2,
            ksize=[1, 1, (max_plot_len-filter_size+1), 1],
            strides=[1, 1, 1, 1],
            padding='VALID',
            name="pool")
        pooled_outputs_PE_2.append(pooledPE_2)
```
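The shape comments in the code can be checked with a small arithmetic sketch. It assumes that each `h_pool_P*_1` tensor is laid out as `[batch, num_filters_total, max_plot_len, 1]`, which is what the kernel shape suggests. The function name `cnn2_shapes` and the sample sizes are hypothetical.

```python
def cnn2_shapes(batch, filter_num, num_filter_sizes1, max_plot_len,
                filter_size, filter_num2):
    """Trace CNN2 tensor shapes for one entry of self.filter_size2."""
    # self.filter_num * len(self.filter_size)
    num_filters_total = filter_num * num_filter_sizes1
    # VALID conv with stride 1 along the sentence axis.
    n_pos = max_plot_len - filter_size + 1
    return {
        "input": (batch, num_filters_total, max_plot_len, 1),       # h_pool_P*_1 (assumed)
        "filter": (num_filters_total, filter_size, 1, filter_num2),  # W2 / WQ2
        "conv": (batch, 1, n_pos, filter_num2),                      # convPQ_2 ... convPE_2
        "attention_transposed": (batch, filter_num2, n_pos, 1),      # wPQ_2 after first transpose
        "attention_pooled": (batch, 1, n_pos, 1),                    # wPQ_2 after max_pool
        "attention_tiled": (batch, 1, n_pos, filter_num2),           # wPQ_2 after tile + transpose
        "pooled": (batch, 1, 1, filter_num2),                        # pooledPA_2 ... pooledPE_2
    }


shapes = cnn2_shapes(batch=8, filter_num=64, num_filter_sizes1=3,
                     max_plot_len=20, filter_size=3, filter_num2=32)
for name, shape in shapes.items():
    print(name, shape)
```

The tiled attention map has the same shape as the conv output, so the element-wise product in `hiddenPA_2 ... hiddenPE_2` is well defined. Broadcasting the pooled `[batch, 1, n_pos, 1]` map directly should give the same product without `tf.tile`.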