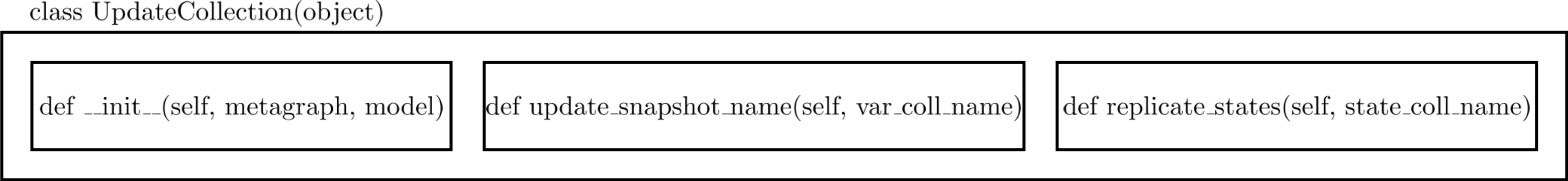

class UpdateCollection(object):

"""Update collection info in MetaGraphDef for AutoParallel optimizer."""

def __init__(self, metagraph, model):

self._metagraph = metagraph

self.replicate_states(model.initial_state_name)

self.replicate_states(model.final_state_name)

self.update_snapshot_name("variables")

self.update_snapshot_name("trainable_variables")

def update_snapshot_name(self, var_coll_name):

var_list = self._metagraph.collection_def[var_coll_name]

for i, value in enumerate(var_list.bytes_list.value):

var_def = variable_pb2.VariableDef()

var_def.ParseFromString(value)

# Somehow node Model/global_step/read doesn't have any fanout and seems to

# be only used for snapshot; this is different from all other variables.

if var_def.snapshot_name != "Model/global_step/read:0":

var_def.snapshot_name = with_autoparallel_prefix(

0, var_def.snapshot_name)

value = var_def.SerializeToString()

var_list.bytes_list.value[i] = value

def replicate_states(self, state_coll_name):

state_list = self._metagraph.collection_def[state_coll_name]

num_states = len(state_list.node_list.value)

for replica_id in range(1, FLAGS.num_gpus):

for i in range(num_states):

state_list.node_list.value.append(state_list.node_list.value[i])

for replica_id in range(FLAGS.num_gpus):

for i in range(num_states):

index = replica_id * num_states + i

state_list.node_list.value[index] = with_autoparallel_prefix(

replica_id, state_list.node_list.value[index])

Figure 1: Schematic of class UpdateCollection

Figure 1: Schematic of class UpdateCollection