function transcribe_file_with_word_time_offsets()

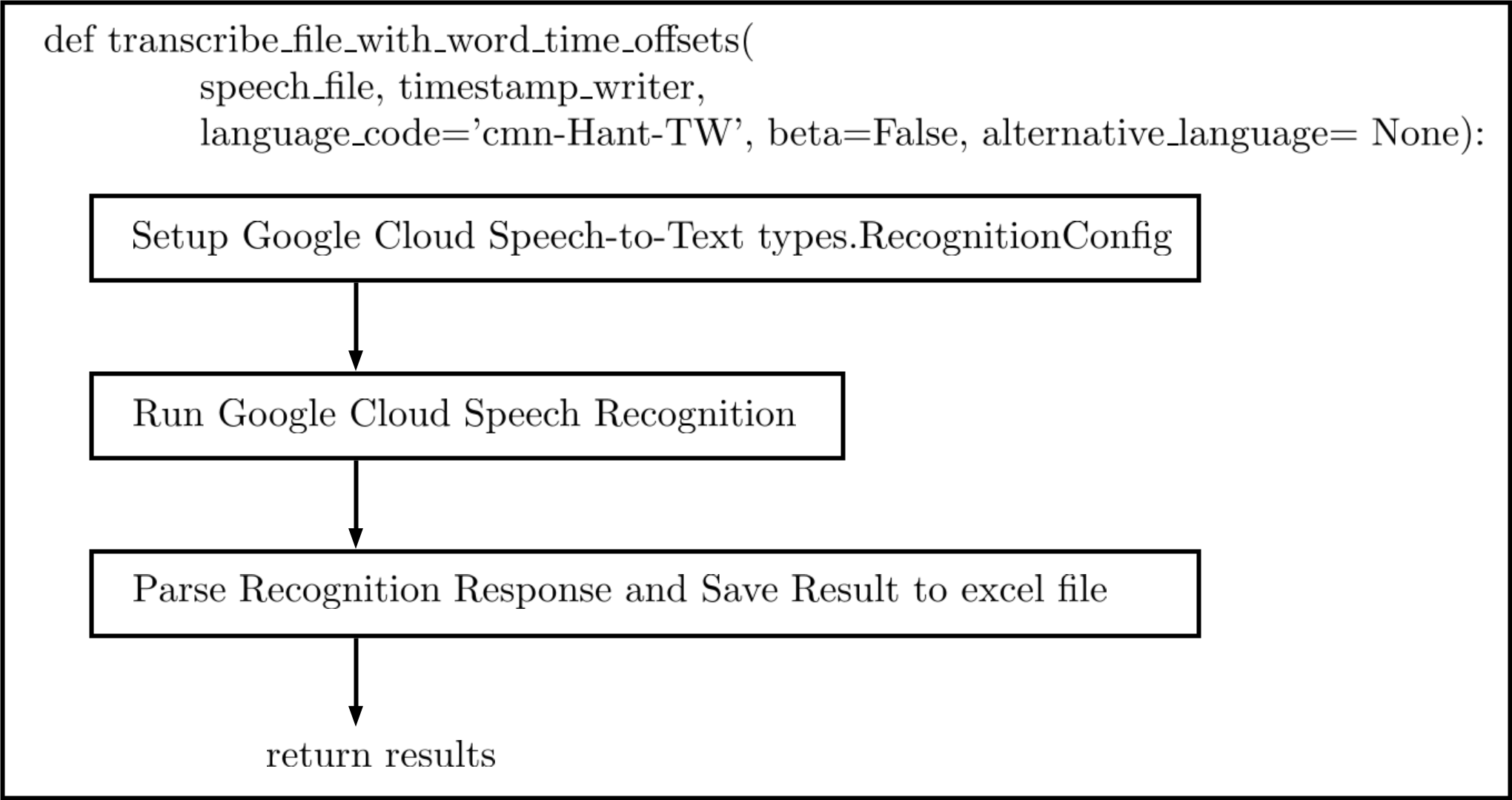

Fig.1 Flowchart of function transcribe_file_with_word_time_offsets

Fig. 1 shows the flowchart of the function transcribe_file_with_word_time_offsets.

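The function transcribe_file_with_word_time_offsets takes the following input parameters:

- speech_file: file name of the audio to transcribe

- timestamp_writer: the xlsx file that receives the results (it is passed to DataFrame.to_excel)

- language_code: language to transcribe (default 'cmn-Hant-TW')

- beta: whether to use the beta version of the Google Cloud API (speech_v1p1beta1); default False

- alternative_language: alternative languages, only passed on in the beta branch (not currently used)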

The function transcribe_file_with_word_time_offsets consists of three main code blocks:

- Set up Google Cloud Speech-to-Text types.RecognitionConfig

- Run Google Cloud Speech Recognition

- Parse the recognition response and save the result to an Excel file
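The parsing step in the third block can be tried without calling the API. The following is a minimal sketch, assuming stand-in objects that mimic the response shape the function reads (an alternative with a list of words, each carrying start_time and end_time with seconds and nanos fields). The helpers offset_to_seconds, words_to_rows and make_word are hypothetical names introduced here for illustration; they are not part of the original module or of the Google client library.

```python
from types import SimpleNamespace


def offset_to_seconds(offset):
    """Convert a duration with `seconds` and `nanos` fields to float seconds."""
    return offset.seconds + offset.nanos * 1e-9


def words_to_rows(alternative):
    """Flatten one recognition alternative into a list of per-word dicts."""
    rows = []
    for word_info in alternative.words:
        rows.append({
            "word": word_info.word,
            "start_time": offset_to_seconds(word_info.start_time),
            "end_time": offset_to_seconds(word_info.end_time),
            # Word confidence is only requested in the beta branch,
            # so fall back to NaN when the attribute is missing.
            "confidence": getattr(word_info, "confidence", float("nan")),
        })
    return rows


def make_word(word, start, end, **extra):
    """Hypothetical stand-in for the API's word object; times are (seconds, nanos)."""
    return SimpleNamespace(
        word=word,
        start_time=SimpleNamespace(seconds=start[0], nanos=start[1]),
        end_time=SimpleNamespace(seconds=end[0], nanos=end[1]),
        **extra,
    )


# Fake alternative used only to exercise the parsing logic.
fake_alternative = SimpleNamespace(
    transcript="四三",
    words=[
        make_word("四", (0, 500_000_000), (1, 0), confidence=0.9),
        make_word("三", (1, 0), (1, 250_000_000)),  # no confidence attribute
    ],
)

rows = words_to_rows(fake_alternative)
for row in rows:
    print(row)
```

Each dict corresponds to one row of the per-result sheet that the function writes, with the word as the row label and start_time, end_time and confidence as columns.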

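The function transcribe_file_with_word_time_offsets collects the transcripts in the variable results and returns it. In transcribe_file_with_word_time_offset.py, the return value goes back to the calling function batch_transcribe_speechFile (line 143).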

```python
# Imports the listing relies on; they presumably sit at the top of the original module.
import io
import re

import pandas as pd
from scipy.io import wavfile  # assumed source of wavfile; read() returns (rate, data)

# parse_filepath is a helper defined elsewhere in the same module.


def transcribe_file_with_word_time_offsets(speech_file, timestamp_writer, language_code='cmn-Hant-TW', beta=False, alternative_language=None):
    """Transcribe the given audio file synchronously and output the word time
    offsets."""
    # Block 1: set up types.RecognitionConfig.
    samp_freq, _ = wavfile.read(speech_file)  # sample rate taken from the WAV file
    if beta:
        from google.cloud import speech_v1p1beta1 as speech
        from google.cloud.speech_v1p1beta1 import enums
        from google.cloud.speech_v1p1beta1 import types

        config = types.RecognitionConfig(
            encoding=enums.RecognitionConfig.AudioEncoding.LINEAR16,
            sample_rate_hertz=samp_freq,
            language_code=language_code,
            #alternative_language_codes=['en-US'],
            alternative_language_codes=alternative_language,
            enable_word_time_offsets=True,
            speech_contexts=[types.SpeechContext(
                phrases=['四', '三', '二', '一'],
            )],
            enable_word_confidence=True
        )

    else:
        from google.cloud import speech
        from google.cloud.speech import enums
        from google.cloud.speech import types

        config = types.RecognitionConfig(
            encoding=enums.RecognitionConfig.AudioEncoding.LINEAR16,
            sample_rate_hertz=samp_freq,
            language_code=language_code,
            enable_word_time_offsets=True,
            speech_contexts=[types.SpeechContext(
                phrases=['四', '三', '二', '一'],
            )],
            #enable_word_confidence=True
        )

    # Block 2: run Google Cloud speech recognition on the whole file.
    client = speech.SpeechClient()

    with io.open(speech_file, 'rb') as audio_file:
        content = audio_file.read()

    audio = types.RecognitionAudio(content=content)

    response = client.recognize(config, audio)

    # Block 3: parse the response and save each result to the Excel file.
    _, filename = parse_filepath(speech_file)
    # Assumes file names look like 'C<index>.wav'; re.match returns None otherwise.
    n = re.match(r'C(.+)\.wav', filename)
    audio_index = n.group(1)
    results = []
    for i, result in enumerate(response.results):
        # Only the top-ranked alternative of each result is used.
        alternative = result.alternatives[0]
        print(u'Transcript {}_{}: {}'.format(audio_index, i+1, alternative.transcript))
        results.append(alternative.transcript)

        word_index = []
        start_time_column = []
        end_time_column = []
        confidences_list = []
        for word_info in alternative.words:
            word = word_info.word
            start_time = word_info.start_time
            end_time = word_info.end_time
            word_index.append(word)
            # Convert (seconds, nanos) offsets to float seconds.
            start_time_column.append(start_time.seconds + start_time.nanos * 1e-9)
            end_time_column.append(end_time.seconds + end_time.nanos * 1e-9)
            if hasattr(word_info, 'confidence'):
                confidences_list.append(word_info.confidence)
            else:
                confidences_list.append(float('nan'))

        df = pd.DataFrame({'start_time': start_time_column, 'end_time': end_time_column, 'confidence': confidences_list}, index=word_index)
        #print(df)
        df.index.name = 'word'
        # One sheet per result, named '<filename>_<result number>'.
        df.to_excel(timestamp_writer, sheet_name='{}_{}'.format(filename, i+1))

    return results
```
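Two naming steps in the listing are fragile: n.group(1) raises an AttributeError when the file name does not match 'C<index>.wav', and the sheet name '<filename>_<n>' can exceed Excel's 31-character limit or contain characters Excel rejects in sheet names. The following is a minimal sketch of more defensive versions, under the assumption that truncating and substituting characters is acceptable for sheet names. The helpers extract_audio_index and make_sheet_name are hypothetical and do not exist in the original module.

```python
import re

# Characters Excel rejects in sheet names, and its 31-character limit.
_INVALID_SHEET_CHARS = re.compile(r"[:\\/?*\[\]]")
_MAX_SHEET_NAME = 31


def extract_audio_index(filename):
    """Return the part between the leading 'C' and '.wav', or None if no match."""
    match = re.match(r"C(.+)\.wav", filename)
    return match.group(1) if match else None


def make_sheet_name(filename, result_number):
    """Build '<filename>_<n>' as in the listing, made safe for Excel."""
    suffix = "_{}".format(result_number)
    cleaned = _INVALID_SHEET_CHARS.sub("_", filename)
    # Trim the file name part so the suffix always survives truncation.
    return cleaned[: _MAX_SHEET_NAME - len(suffix)] + suffix


print(extract_audio_index("C012.wav"))   # 012
print(extract_audio_index("notes.wav"))  # None
print(make_sheet_name("C012.wav", 1))    # C012.wav_1
```

With a None return, the caller can decide whether to skip the file or fall back to the full file name as the label in the printed transcript.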