The model is a neural network with a recurrent attention model over a possibly large external memory. The architecture is a form of Memory Network, but unlike the earlier Memory Network model it is trained end-to-end. It therefore requires significantly less supervision during training, which makes it more generally applicable in realistic settings. It can also be seen as an extension of RNNsearch to the case where multiple computational steps (hops) are performed per output symbol.
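The idea of several attention hops over a memory can be illustrated with a minimal NumPy sketch. It is not the authors' implementation and rests on assumptions the text above does not state: attention weights are taken as a softmax over inner products between the current query state and the memory, the read vector is a weighted sum over a second (output) view of the memory, and the read vector is added to the query state between hops. The names `memory_hops`, `memory_in`, and `memory_out` are hypothetical.

```python
import numpy as np


def softmax(z):
    # Subtract the max for numerical stability before exponentiating.
    z = z - np.max(z)
    e = np.exp(z)
    return e / e.sum()


def memory_hops(memory_in, memory_out, query, hops=3):
    """Run `hops` soft-attention reads over a fixed memory.

    memory_in  : (n_slots, d) array used to score slots against the query (assumed).
    memory_out : (n_slots, d) array that is read from (assumed).
    query      : (d,) initial query state.
    Returns the final state and the attention weights of every hop.
    """
    u = query
    attention = []
    for _ in range(hops):
        p = softmax(memory_in @ u)   # attention weights over memory slots
        o = p @ memory_out           # weighted sum of the output memory
        u = u + o                    # assumed update: add the read vector to the state
        attention.append(p)
    return u, attention


# Toy data: 5 memory slots of dimension 4, random values for illustration only.
rng = np.random.default_rng(0)
m_in = rng.normal(size=(5, 4))
m_out = rng.normal(size=(5, 4))
q = rng.normal(size=4)

state, weights = memory_hops(m_in, m_out, q, hops=3)
```

Every step here is differentiable, which is what allows a model of this kind to be trained end-to-end from input-output pairs alone. Setting `hops=1` gives a single attention read per output.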

Approach

Our model t

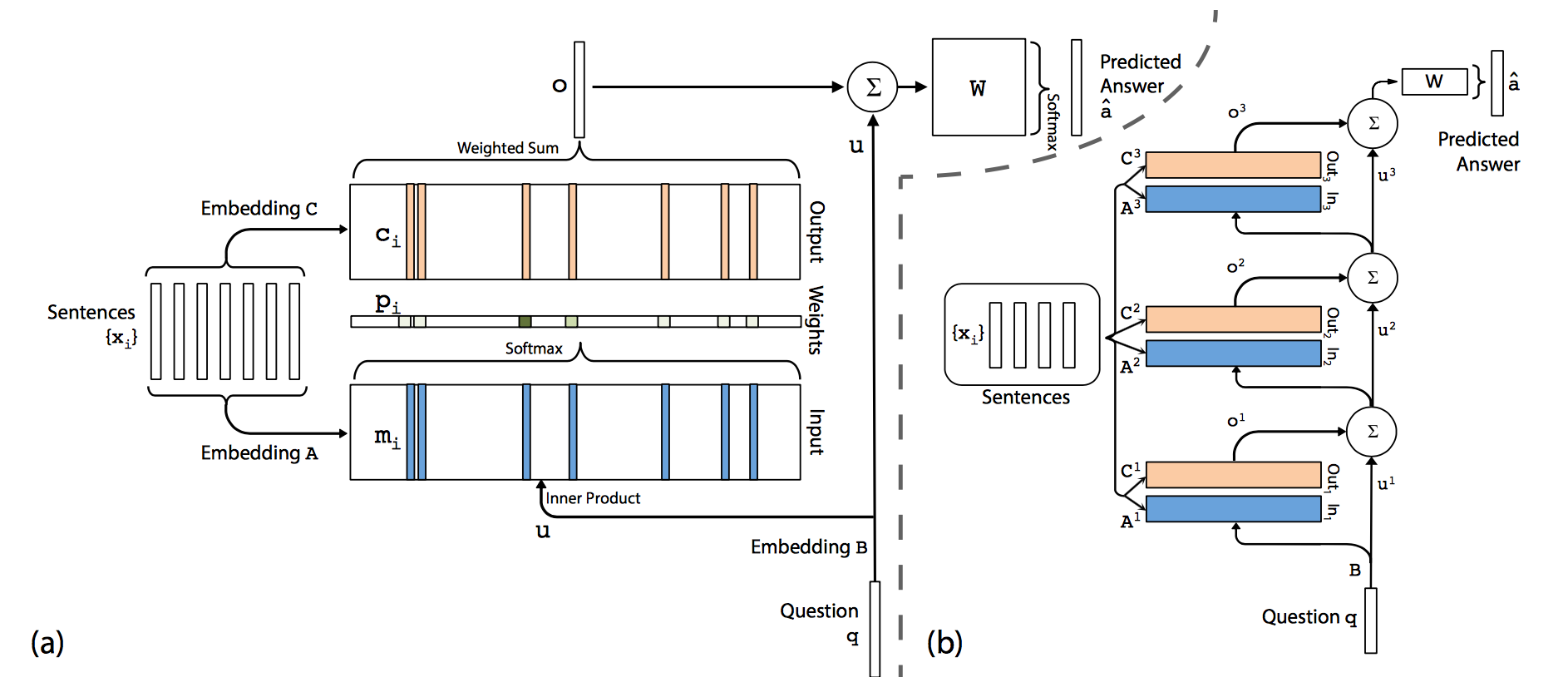

Figure 1: (a) A single-layer version of our model. (b) A three-layer version of our model. In practice, we can constrain several of the embedding matrices to be the same (see Section 2.2).

[0] End-To-End Memory Networks
